ASR Robustness for Real-World User-Generated Audio

TL;DR - Improving speech recognition

Real-world creator audio is messy, and that’s exactly where many speech recognition systems break. Research done by Linguana’s team members shows how to dramatically improve transcription accuracy in noisy, in-the-wild conditions without retraining large models, making global localization more reliable at scale. The result: better transcripts, better translations, and higher-quality localized videos, even when the original audio isn’t studio-perfect.

Why reliable speech recognition is the foundation of high-quality global localization

Robust automatic speech recognition (ASR) is the difference between a speech-enabled product that works in the lab and one that works in the real world. In practice, speech is captured on consumer devices, in noisy spaces, at a distance from the microphone, and across a wide range of accents and speaking styles. Under these conditions, even strong modern ASR systems can degrade sharply - creating transcription errors that then ripple through downstream language workflows.

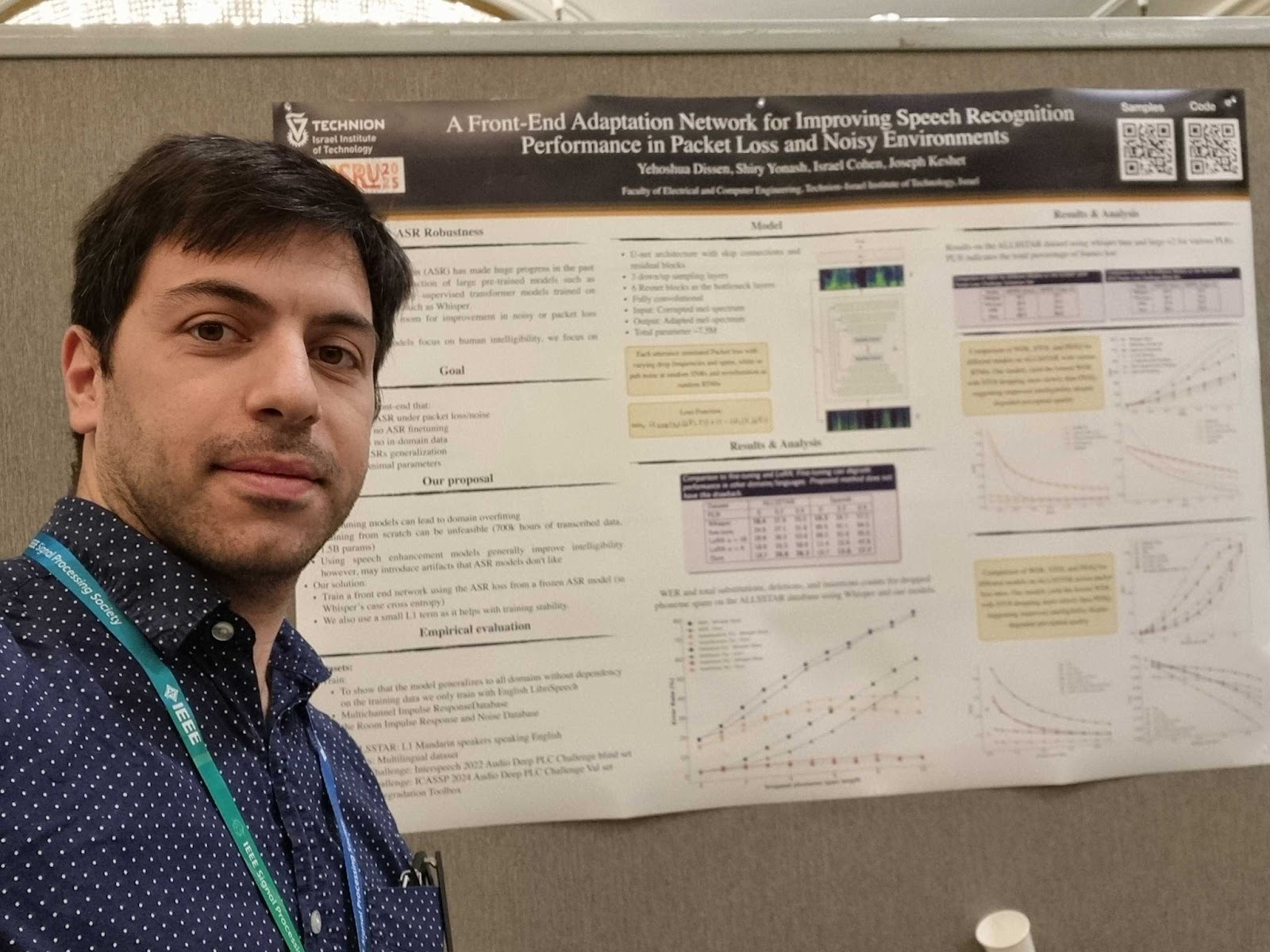

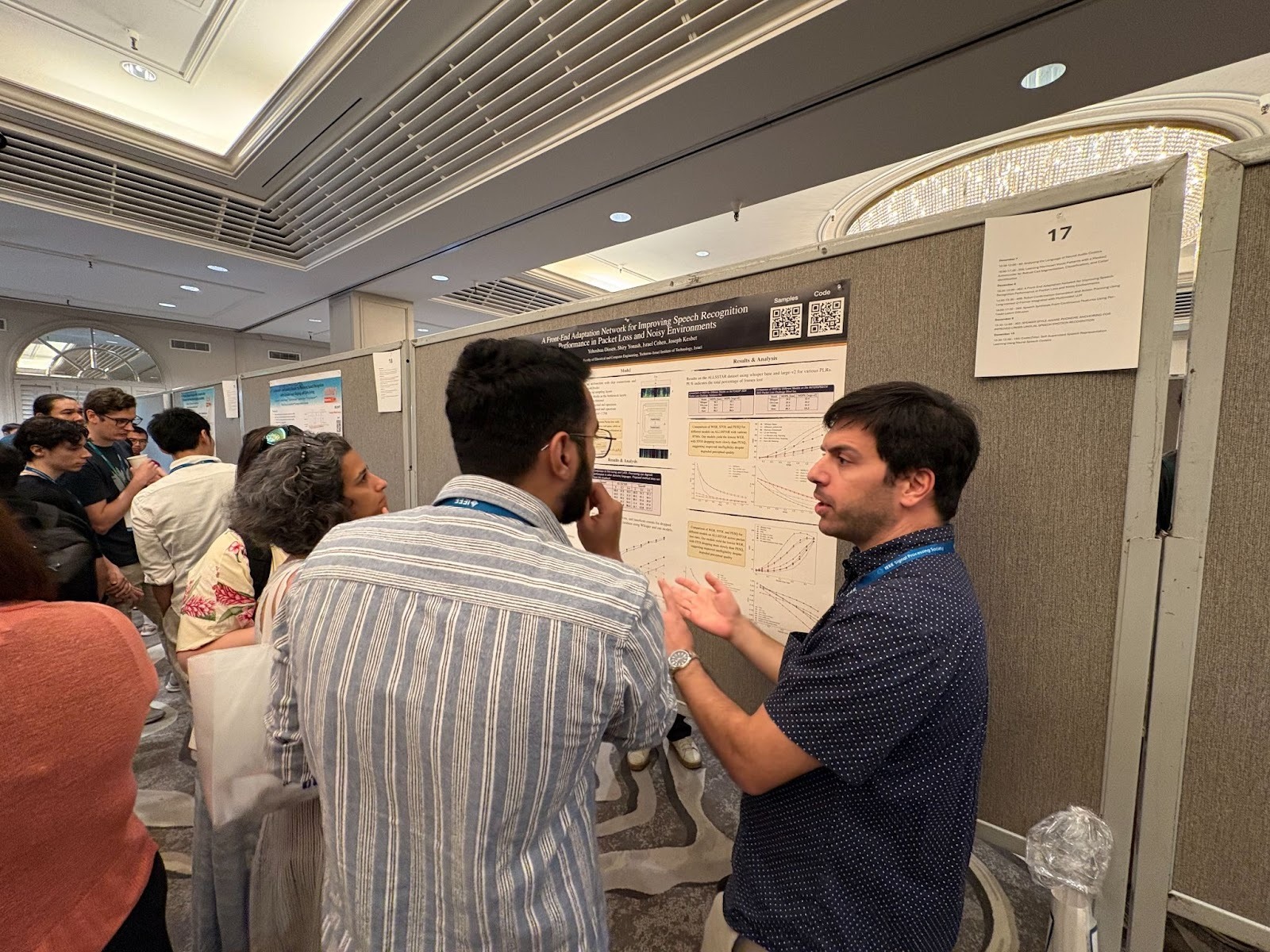

At the 2025 IEEE Automatic Speech Recognition and Understanding (ASRU) Workshop (December 6–10, 2025, Honolulu, Hawaii), I presented the paper “A Front-End Adaptation Network for Improving Speech Recognition Performance in Packet Loss and Noisy Environments” (published in IEEE/ACM Transactions on Audio, Speech, and Language Processing), which I developed as part of my research at the Technion – Israel Institute of Technology. The work focuses on practical ways to make ASR more resilient to in-the-wild audio conditions such as background noise and reverberation, while also examining packet loss as an additional stressor in some delivery pipelines. Rather than proposing a new end-to-end ASR model, it shows a modular path to improved robustness while preserving the broad generalization that makes today’s pretrained models valuable.

The challenge is that the usual ways to “make ASR better” are often impractical for companies, especially small and mid-sized teams. Retraining a large foundation model is typically too expensive, and fine-tuning carries a risk: it can improve performance in the targeted condition while reducing performance in other environments or languages due to domain shift. This makes robustness improvements hard to deploy safely when you need broad generalization.

The paper’s contribution is a modular strategy that improves robustness without retraining or fine-tuning the underlying ASR model. Instead of modifying the recognizer itself, the method adds a small “front-end” adaptation module that learns to reshape corrupted or noisy input into something the existing ASR model can handle better. This fits a common production constraint: teams rely on a strong pretrained ASR model and want better results in messy real-world conditions without repeatedly retraining or revalidating the full system.
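The idea of training only a front-end while the recognizer stays frozen can be illustrated with a deliberately tiny toy. This is a sketch under loud assumptions, not the paper’s architecture: the “recognizer” is a fixed scalar function, the corruption is a made-up gain-and-offset distortion, and the front-end is a two-parameter affine correction. All names (`frozen_recognizer`, `corrupt`, `train_front_end`, `mean_output_error`) are hypothetical. What it does show is the training pattern: the gradient flows through the recognizer, but only the front-end parameters are updated.

```python
import random

# Stand-in for a pretrained ASR model: its parameters are never updated.
W_FROZEN, B_FROZEN = 2.0, -1.0


def frozen_recognizer(x: float) -> float:
    """Fixed function playing the role of the frozen recognizer."""
    return W_FROZEN * x + B_FROZEN


def corrupt(x: float) -> float:
    """Hypothetical channel distortion: attenuation plus a constant offset."""
    return 0.5 * x + 0.3


def train_front_end(steps: int = 2000, lr: float = 0.05, seed: int = 0):
    """Learn an affine front-end (gain, bias) placed before the frozen recognizer."""
    rng = random.Random(seed)
    gain, bias = 1.0, 0.0  # start as an identity front-end
    for _ in range(steps):
        clean = rng.uniform(-1.0, 1.0)
        noisy = corrupt(clean)
        # Target: what the recognizer would output on clean input.
        target = frozen_recognizer(clean)
        pred = frozen_recognizer(gain * noisy + bias)
        err = pred - target
        # Chain rule through the frozen recognizer (d pred / d adapted = W_FROZEN).
        grad_adapted = 2.0 * err * W_FROZEN
        # Only the front-end parameters move; W_FROZEN and B_FROZEN stay fixed.
        gain -= lr * grad_adapted * noisy
        bias -= lr * grad_adapted
    return gain, bias


def mean_output_error(gain: float, bias: float) -> float:
    """Average recognizer-output error on a small grid of clean inputs."""
    grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
    errors = [
        abs(frozen_recognizer(gain * corrupt(c) + bias) - frozen_recognizer(c))
        for c in grid
    ]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    gain, bias = train_front_end()
    print("error without front-end:", mean_output_error(1.0, 0.0))
    print("error with trained front-end:", mean_output_error(gain, bias))
```

In this toy the front-end simply learns to invert the distortion. A real front-end would be a neural network operating on audio or acoustic features, trained against a recognition-oriented loss, but the division of labor is the same: the recognizer is treated as a fixed component and the small module in front of it absorbs the adaptation.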

A key theme of the paper is that “speech enhancement” for humans is not the same as “speech enhancement” for machines. Traditional packet-loss concealment and noise suppression systems often optimize for perceptual quality, but they can introduce artifacts that hurt ASR. In contrast, our approach is explicitly optimized for transcription accuracy rather than how clean the audio sounds. The result is a robustness method aligned with the metric that matters to downstream language systems: getting the words right.
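To make “transcription accuracy” concrete, the conventional yardstick in ASR is word error rate (WER): the word-level edit distance between a reference transcript and the recognizer’s output, divided by the number of reference words. The sketch below is a minimal, dependency-free WER function. It assumes simple lowercasing and whitespace tokenization, and the function name `word_error_rate` and the example sentences are illustrative, not taken from the paper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "turn left at the market"
    # One dropped word out of five reference words.
    print(word_error_rate(reference, "turn left at market"))  # 0.2
```

Comparing two enhancement front-ends by the WER of the transcripts they produce, rather than by how clean their output sounds, is the kind of evaluation the paragraph above argues for.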

For a company like Linguana, the relevance is straightforward: much of the speech that creators publish as part of global, user-generated content is in-the-wild audio such as vlogs, travel clips, street conversations, outdoor recordings, phone-shot recordings, and other clips captured on consumer microphones in acoustically challenging spaces. In any workflow where speech is transcribed and then used for translation, subtitles, indexing, or QA, ASR becomes an upstream dependency. When ASR fails under acoustic stress (noise, reverberation, far-field microphones, wind, crowds), errors don’t stay isolated: they propagate into downstream language tasks. Improving robustness is therefore not just about “better transcripts”; it’s about more stable output quality, fewer user-facing mistakes in multilingual workflows, and more predictable performance across varied global conditions.

This work supports a pragmatic direction for products operating in this space: invest in robustness in a way that preserves generalization, avoids expensive retraining cycles, and targets what downstream language workflows ultimately need - reliable recognition from real-world speech, even when conditions are imperfect.

FAQ

Why does ASR robustness matter for creators?

Most creator content is recorded in real environments like streets, cars, outdoors, and noisy homes, not in studios. If transcription fails, errors cascade into translation and dubbing, directly affecting quality and revenue.

What’s different about this approach?

Instead of retraining or fine-tuning massive ASR models, this method adds a lightweight front-end adaptation module that improves performance in noisy conditions while preserving global language generalization.

How does this impact localization quality?

More accurate transcripts mean fewer translation errors, more natural dubbing, and more consistent results across languages. This is especially critical for long-form, dialogue-heavy, or technical content.

Why is this important at scale?

Creators who publish new videos frequently and want to reach audiences across dozens of languages need accurate results. Linguana’s approach avoids costly retraining cycles and delivers production-ready robustness for millions of videos per month.